Workflow-Driven Distributed Machine Learning in CHASE-CI: A Cognitive Hardware and Software Ecosystem Community Infrastructure

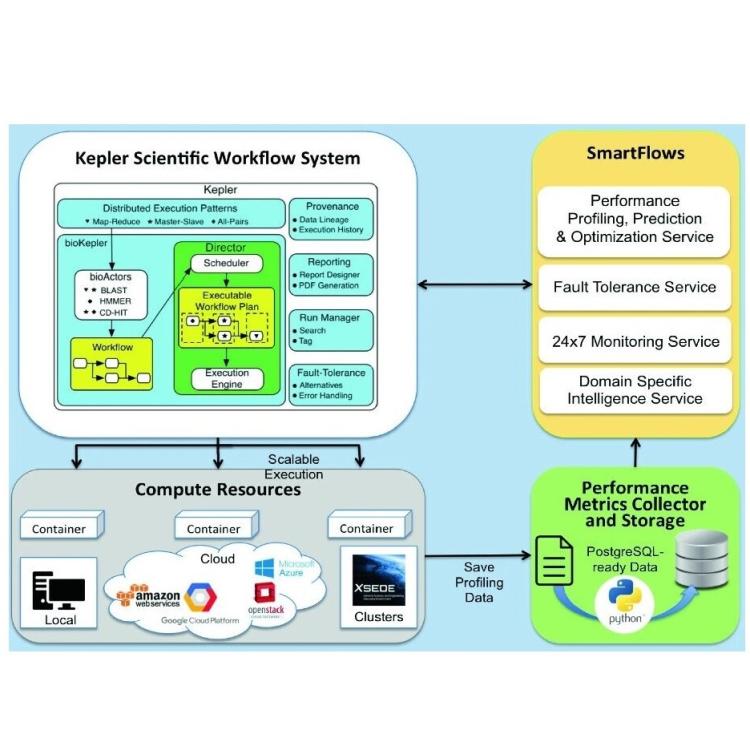

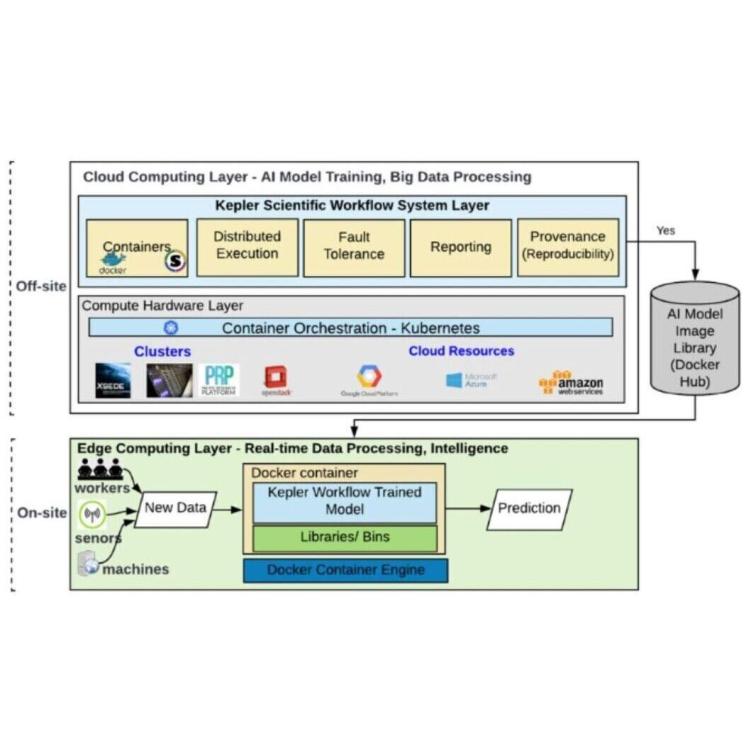

Advances in data, computing, and networking over the last two decades have led to a shift in many application domains, which now include machine learning on big data as part of the scientific process and therefore require new capabilities for integrated and distributed hardware and software infrastructure. This paper contributes a workflow-driven approach for dynamic data-driven application development on top of a new kind of networked cyberinfrastructure called CHASE-CI.