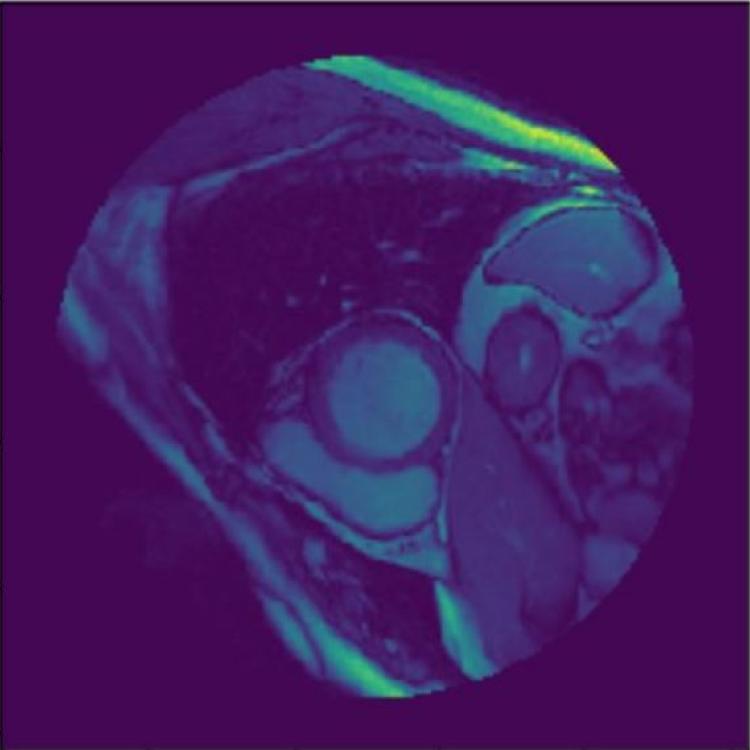

Left Ventricle Segmentation and Volume Estimation on Cardiac MRI Using Deep Learning

In the United States, heart disease is the leading cause of death for both men and women, accounting for 610,000 deaths each year. Physicians use magnetic resonance imaging (MRI) to image the heart and non-invasively estimate its structural and functional parameters for cardiovascular diagnosis and disease management. The end-systolic volume (ESV) and end-diastolic volume (EDV) of the left ventricle (LV), together with the ejection fraction (EF) derived from them, are indicators of heart disease.
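
To make the relationship between these quantities concrete, the following minimal sketch computes EF from EDV and ESV using the standard clinical definition, EF = (EDV − ESV) / EDV, expressed as a percentage. The function name `ejection_fraction` and the example volumes are hypothetical, chosen only for illustration; they are not part of the method or data described here.

```python
def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Return the ejection fraction in percent.

    edv_ml: end-diastolic volume of the left ventricle (e.g. in mL)
    esv_ml: end-systolic volume of the left ventricle (same unit as edv_ml)
    """
    if edv_ml <= 0:
        raise ValueError("EDV must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("ESV must lie between 0 and EDV")
    # Stroke volume: the amount of blood ejected in one heartbeat.
    stroke_volume = edv_ml - esv_ml
    return 100.0 * stroke_volume / edv_ml


# Hypothetical example values, not taken from any dataset.
ef = ejection_fraction(edv_ml=120.0, esv_ml=50.0)
print(f"EF = {ef:.1f}%")  # prints "EF = 58.3%"
```

Because EF is a ratio of the two volumes, any error in the estimated EDV or ESV carries over directly into the EF value.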